AI Journey - Baby Steps

Welcome!

Unless you have been living under a rock, you probably know that AI is taking the world by storm. It's the next big thing...so they say. OpenAI and its flagship tool ChatGPT have semi-revolutionized how people work in their day-to-day lives, and with the rise of AI agents, they say it's going to change how we work even more. So if you have stumbled upon this article, I welcome you to the journey of figuring out AI (without a PhD) and how you can leverage it using open-source models instead of just plain old ChatGPT...so let's begin!

What are Open-Source models?

Open-source models are models that are freely available for anyone to use, study, modify, and distribute (under open-source licenses). These models are normally pre-trained on datasets, so you can quickly load them up and begin playing with them. Now, I am sure there are a few sites that host open-source models, but the site I am primarily going to use in this journey is Hugging Face. This site is the bee's knees for open-source models and datasets. You first need to make an account (which is free), and from there the world is your oyster.

But where and how are we going to run these models?

What a wonderful question! If you don't have a powerful GPU on your computer, how in the world are you going to load and run these open-source models? Well, we are going to be leveraging Google Colab, a free cloud-based service provided by Google where we can execute Python code (note: within reason; if you try to load a large model in the free version, you will more than likely eat up all the free runtime). With the free version, however, we can get away with using a smaller model to do some fun things. Now, I mentioned Python and that can be scary, but don't fret, it's not that bad.

Getting started

When you first load up Google Colab, you are met with a blank cell, much like that of a Jupyter notebook.

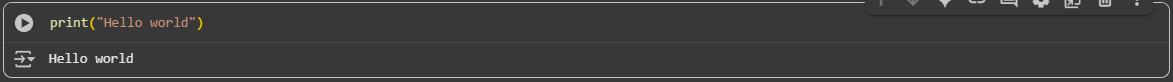

Just to make sure it all works go ahead and do a good ole "Hello world".

    print("Hello world")

You can either click the play button or press CTRL + Enter to run the code block. If all goes well, you'll see an output like the one below.

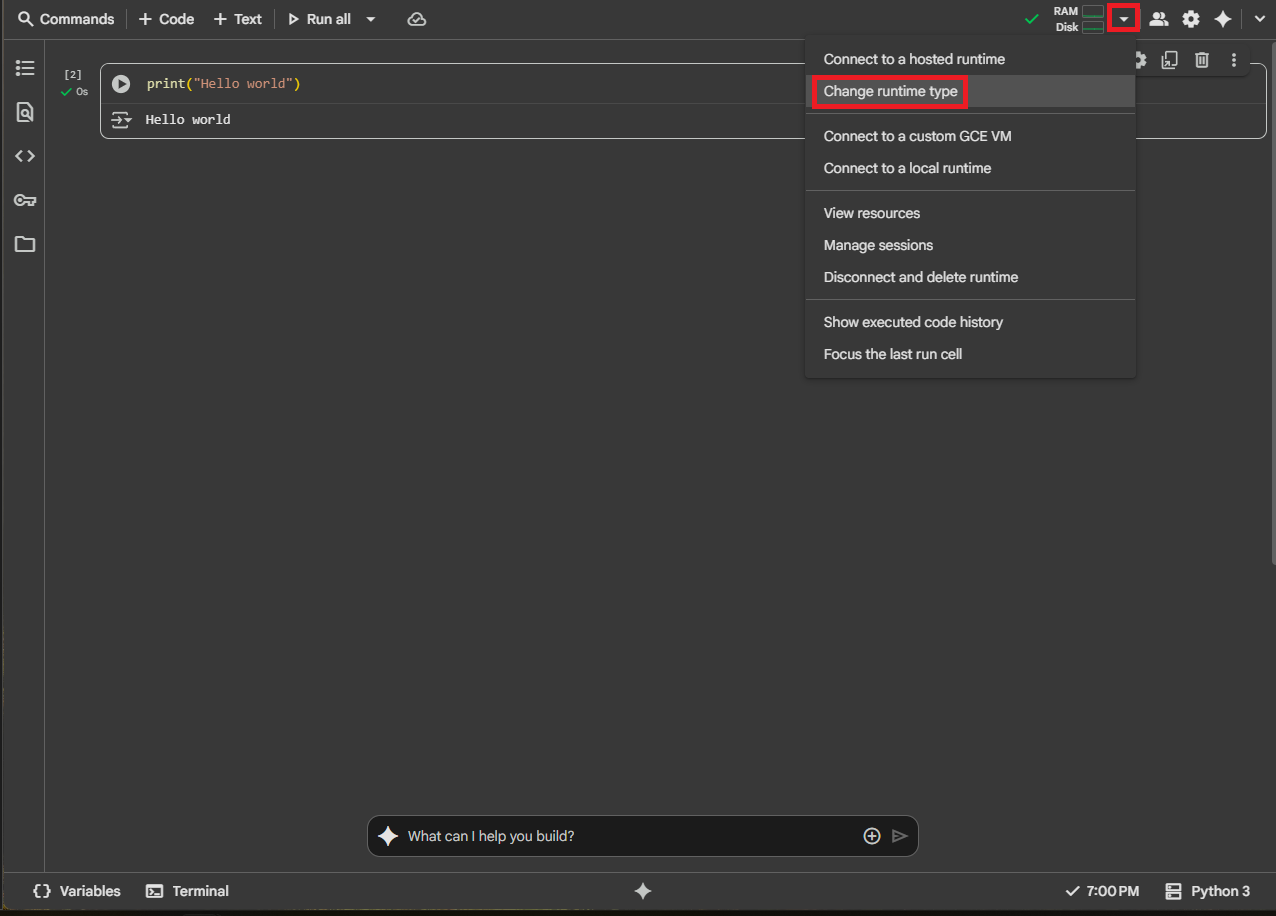

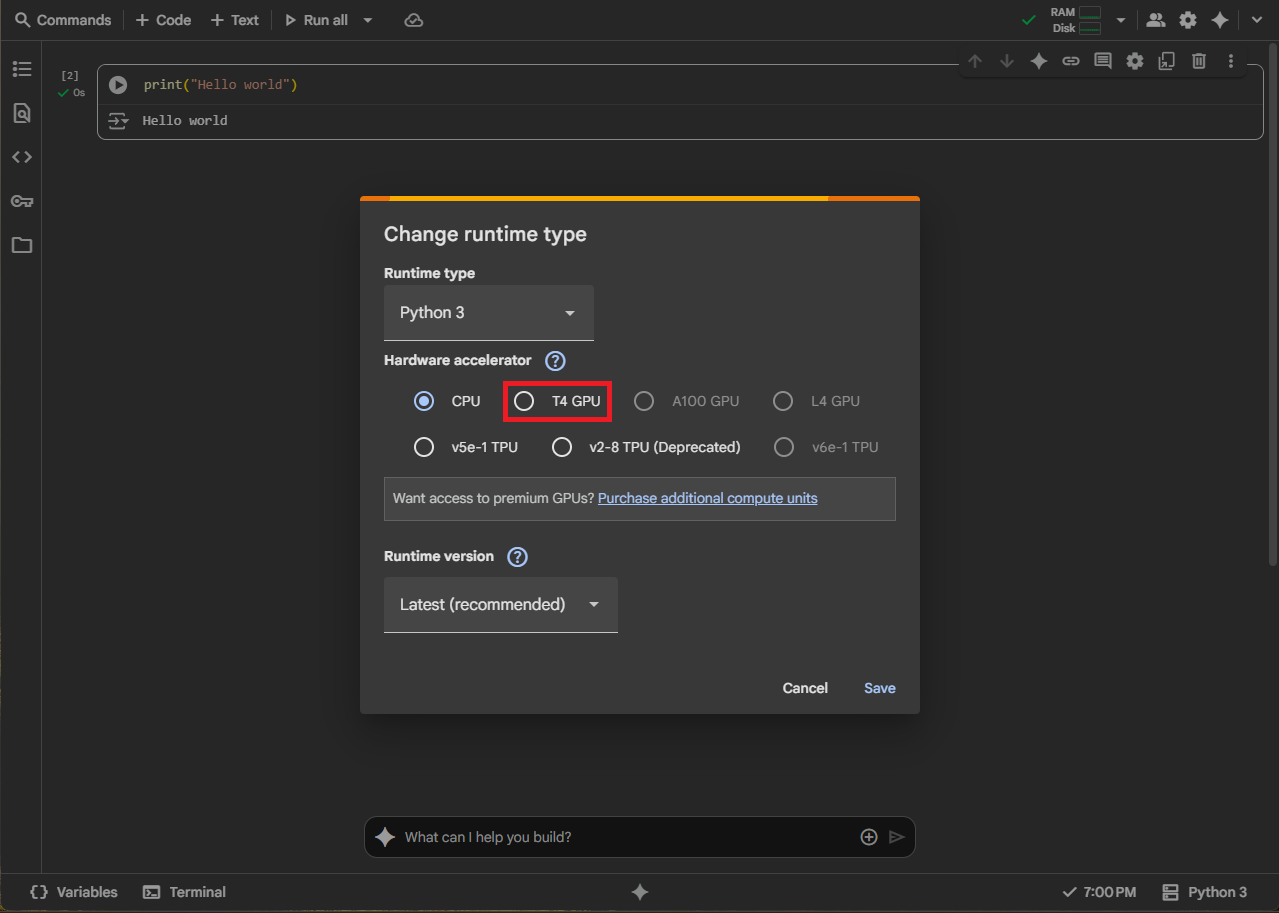

Now that we have a working environment, one of the first things we want to do before we start loading in packages and writing some code is to change the runtime to a T4 GPU, since it defaults to just running off the CPU. To change the runtime, hit the dropdown arrow next to the resources and select "Change runtime type".

From that screen, select the T4 GPU. It will ask if you want to "Disconnect and delete the runtime". Go ahead and select OK, since we don't have anything in the session.
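If you want to double-check that the switch actually took, here is a minimal sketch that asks `nvidia-smi` (the NVIDIA command-line tool, which I am assuming is available on a Colab GPU runtime) what hardware it sees. The helper name `gpu_summary` is made up for this post; it is not part of Colab or any library.

```python
import shutil
import subprocess


def gpu_summary() -> str:
    """Return a one-line description of the GPU, or a note that none was found."""
    # Assumption: a GPU runtime has nvidia-smi on the PATH; a CPU runtime does not.
    if shutil.which("nvidia-smi") is None:
        return "No GPU detected (nvidia-smi not found), probably still on a CPU runtime"

    # Ask only for the GPU name and total memory, as plain CSV without a header row.
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
        capture_output=True,
        text=True,
    )
    if result.returncode != 0:
        return "nvidia-smi is installed but reported an error"
    return result.stdout.strip() or "nvidia-smi returned no GPU information"


print(gpu_summary())
```

If you would rather stay inside Python libraries, PyTorch's `torch.cuda.is_available()` answers the same yes/no question.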

The fun stuff

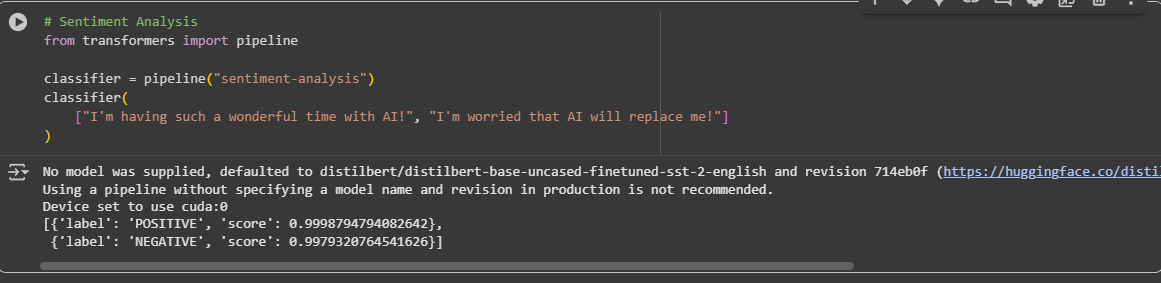

After switching from the CPU to the GPU, we can finally break into the good stuff, the moment you have been waiting for...running some real LLM code. Since we are just starting this journey, we are gonna take it a little slow and lean on the Hugging Face LLM Course, mainly 'cause I have zero clue about what I am doing! One of the first things we can attempt is sentiment analysis. I'm not going to go into great detail about what that is; if you are really curious, give it a nice Google search. The code for testing this looks like the below, and you will notice, if you have ever used Hugging Face in Google Colab, that we aren't loading in a specific model. Don't worry though, one will be supplied to us!

    from transformers import pipeline

    classifier = pipeline("sentiment-analysis")

    classifier(
        ["I'm having such a wonderful time with AI!", "I'm worried that AI will replace me!"]
    )

A little CTRL + Enter and we have some results!
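If you want to work with those results in code rather than just eyeball them, it helps to know the shape: the classifier hands back a list with one dictionary per sentence, each holding a `label` and a `score`. Here is a minimal sketch of reading that structure. The scores below are placeholders I typed in by hand for illustration, not real model output, and `summarize` is a made-up helper name.

```python
sentences = [
    "I'm having such a wonderful time with AI!",
    "I'm worried that AI will replace me!",
]

# Placeholder results in the same shape the sentiment pipeline returns.
# The scores are made up for illustration; yours will differ.
example_results = [
    {"label": "POSITIVE", "score": 0.99},
    {"label": "NEGATIVE", "score": 0.98},
]


def summarize(sentences, results):
    """Pair each sentence with its label and score as a readable line."""
    lines = []
    for sentence, result in zip(sentences, results):
        # Left-align the label and show the score as a percentage.
        lines.append(f"{result['label']:<8} {result['score']:.1%}  {sentence}")
    return lines


for line in summarize(sentences, example_results):
    print(line)
```

In the notebook, you would pass the real output of `classifier(...)` in place of `example_results`.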

You can see that if we don't supply a model, the pipeline defaults to "distilbert/distilbert-base-uncased-finetuned-sst-2-english". Both of our sentences got labels, one strongly positive and the other strongly negative, which makes sense given what the sentences say. Now let's try something a little cooler: how about some text generation? (We are gonna build the next ChatGPT :) )

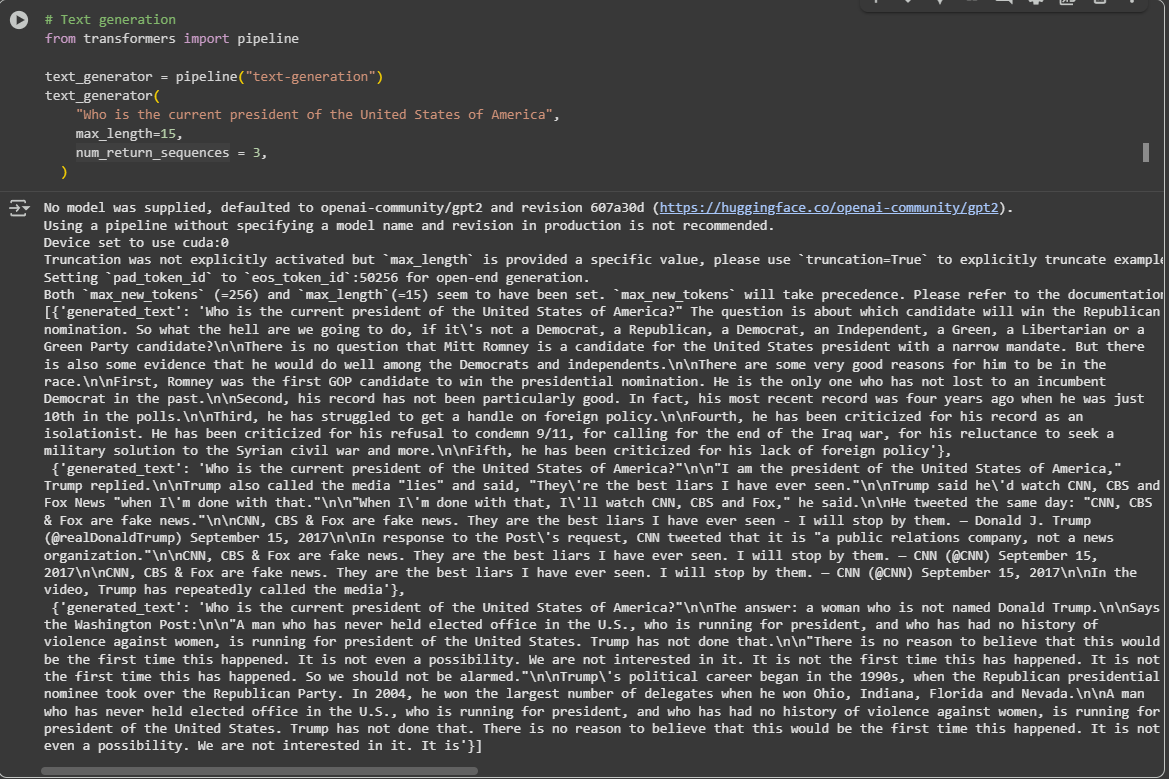

    from transformers import pipeline

    text_generator = pipeline("text-generation")

    text_generator(
        "Who is the current president of the United States of America",
        max_length=15,  # caps the total length in tokens (the prompt plus the generated text)
        num_return_sequences=3,  # number of results returned by the model; defaults to 1 if not specified
    )

Again we press CTRL + Enter and take a look at the results we get for that question. They're...rather interesting!
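The generator also returns a list of dictionaries, one per returned sequence, each with a `generated_text` entry that by default starts with your original prompt. Here is a minimal sketch of pulling out just the part the model added. The result strings are obvious placeholders rather than real model output, and `continuations` is a made-up helper name.

```python
prompt = "Who is the current president of the United States of America"

# Placeholder results in the same shape the text-generation pipeline returns.
# The continuation text is a stand-in, not real model output.
example_results = [
    {"generated_text": prompt + " <continuation 1>"},
    {"generated_text": prompt + " <continuation 2>"},
    {"generated_text": prompt + " <continuation 3>"},
]


def continuations(prompt, results):
    """Return only the text the model added after the prompt."""
    added = []
    for result in results:
        text = result["generated_text"]
        # Drop the echoed prompt if it is there, then tidy the whitespace.
        if text.startswith(prompt):
            text = text[len(prompt):]
        added.append(text.strip())
    return added


for number, text in enumerate(continuations(prompt, example_results), start=1):
    print(f"{number}: {text}")
```

In the notebook, you would pass the real output of `text_generator(...)` in place of `example_results`.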

So maybe we won't be building the next ChatGPT from this, but as we progress, maybe we will get some better answers. Keep going through the Hugging Face LLM Course to get a fuller introduction to using LLMs on the Hugging Face platform. I can tell you it's definitely worth it.

Hopefully this piqued your interest and opened your eyes! Until next time!

No AI used in the making of this post, that I know of at least 😀